recent updates

I’m writing this in mid-2024. GPT-4, Claude 3.5 Sonnet, and Google Gemini 1.5 Pro are the world’s leading LLMs, and the ever-rumored Llama-3-400B is supposedly just around the corner.

The LLMs are wildly capable: they don’t just write sonnets or teach you history; they’ve even turned into amazing coding assistants. Sure, they still hallucinate sometimes, but most of the time they’re no worse than your average person (or Stack Overflow answer).

The problem is that no one knows what the future is going to bring, only that it’s going to happen soon. Will RAG rule the day? Will we need new models with flexible depth-of-compute, as Yann LeCun suggests? Will the scaling laws finally fizzle out? Or will we simply run out of voltage transformers? And with tens, if not hundreds, of billions of dollars in play, the mega AI labs feel like behemoths, ready to wipe out entire business models in a glance, or at least a model update.

So what do you do if you still want to play in this space?

The way I see it, there are three main options: find a defensible niche, adapt at the edge, or build foundations.

Two Python functions:

```python
# Prints a status line naming f, then hands f back unchanged.
def print_status_and_execute(f):
    print("running function " + f.__qualname__)
    return f

def add(x, y):
    return x + y
```

You might want to wrap `add` like this:

```python
status_printing_add = print_status_and_execute(add)
```

That’s kind of OK, except that now you have to change every place in your code that used to call `add` so that it calls the new `status_printing_add` function instead.

Instead, you can just decorate the function definition of add like so:
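Here is a minimal sketch of that, keeping `print_status_and_execute` exactly as defined above. The `@` line is shorthand for writing `add = print_status_and_execute(add)` right after the `def`, so the name `add` is rebound in place and no call sites have to change. One subtlety: as written, `print_status_and_execute` prints once, when the decorator is applied, not on every call. The second decorator, `print_status_each_call`, and the example function `multiply` are hypothetical additions showing the usual wrapper pattern, which prints each time the decorated function runs.

```python
import functools


def print_status_and_execute(f):
    # As defined above: prints once, at decoration time, and returns f unchanged.
    print("running function " + f.__qualname__)
    return f


# Equivalent to defining add normally and then writing
#     add = print_status_and_execute(add)
@print_status_and_execute  # prints "running function add" when this def runs
def add(x, y):
    return x + y


# Hypothetical call-time variant (not from the original post):
# returns a wrapper that prints the status line on every call, then runs f.
def print_status_each_call(f):
    @functools.wraps(f)  # copy __name__, __qualname__, __doc__, etc. from f
    def wrapper(*args, **kwargs):
        print("running function " + f.__qualname__)
        return f(*args, **kwargs)

    return wrapper


@print_status_each_call
def multiply(x, y):
    return x * y


print(add(2, 3))       # 5, with no status line (add was returned unchanged)
print(multiply(2, 3))  # "running function multiply", then 6
```

`functools.wraps` keeps the wrapped function’s name and docstring intact, which is why `multiply.__qualname__` still reads `multiply` rather than `print_status_each_call.<locals>.wrapper`.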

Intro

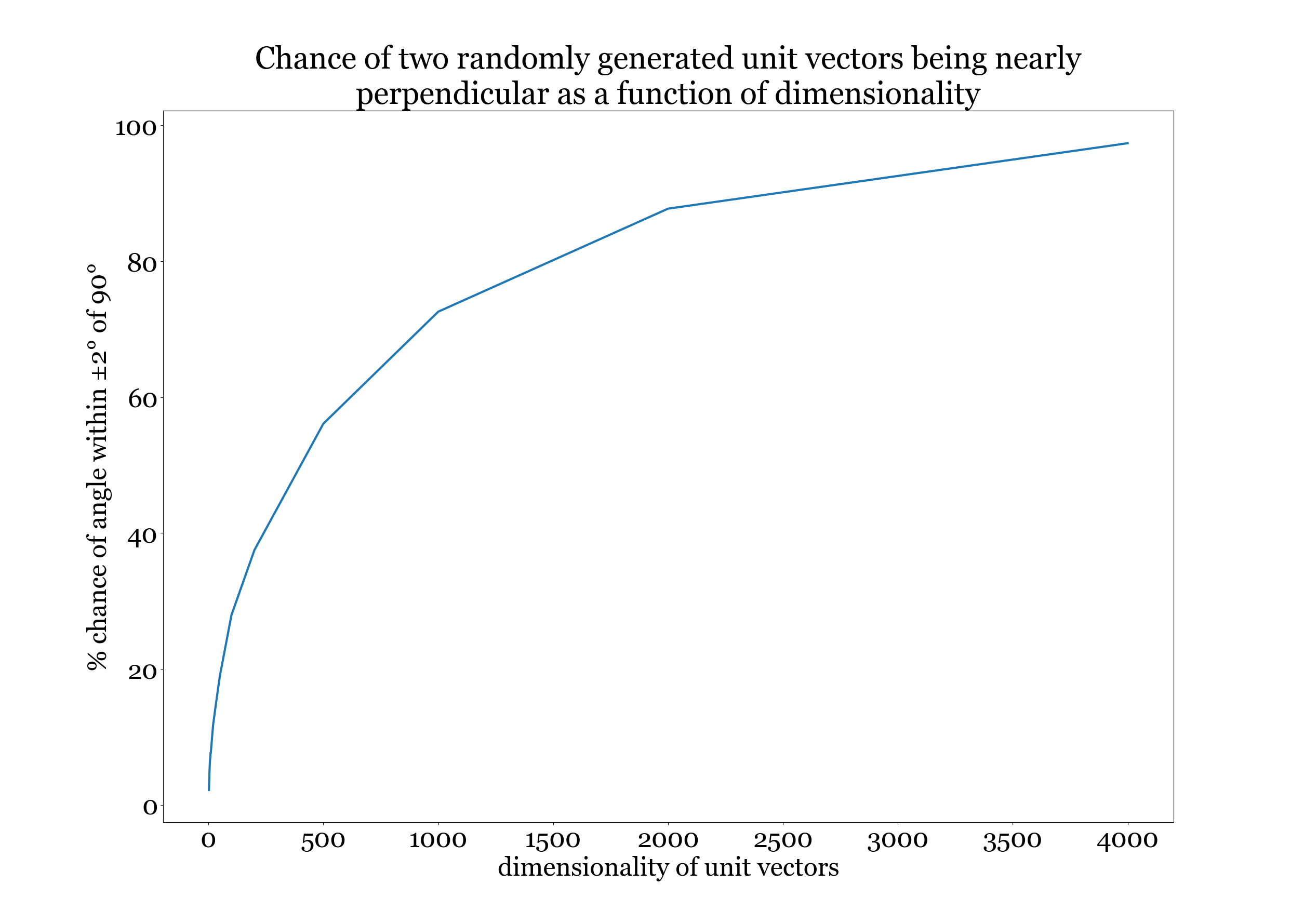

I’ve been meaning to work through the Fast.ai course on practical deep learning for about a year now, ever since I first learned of it, and it’s finally time. As usual, I’ll be burning through this quickly, and thankfully I have a pretty deep background in the gnarlier, more mathematical aspects of the field. I’ve derived and implemented backprop by hand a number of times, and most recently, on the professional side, I was SVP of Product at a startup building LLM inference hardware, so I’ve become quite familiar with the underlying theory and architectures.

But now it’s time to roll my sleeves up and learn the hands-on tools.

Shortbread Cookies

Makes about 3-4 dozen cookies

- 226g unsalted butter

- 90g powdered sugar

- 1 tsp vanilla extract

- 280g AP flour

- 25g cornstarch

- 1/2 tsp salt

For best results, measure ingredients with a scale by weight.

Mix everything together, then refrigerate the dough.

Bake at 350°F (175°C) in a preheated oven for about 13-15 min, or until lightly golden brown.

Sernik (Polish Cheesecake)


Update - 1/04/24

Well, this is a few years out of date. The whole pandemic lasted a lot longer than I think anyone expected, especially with the California lockdowns.

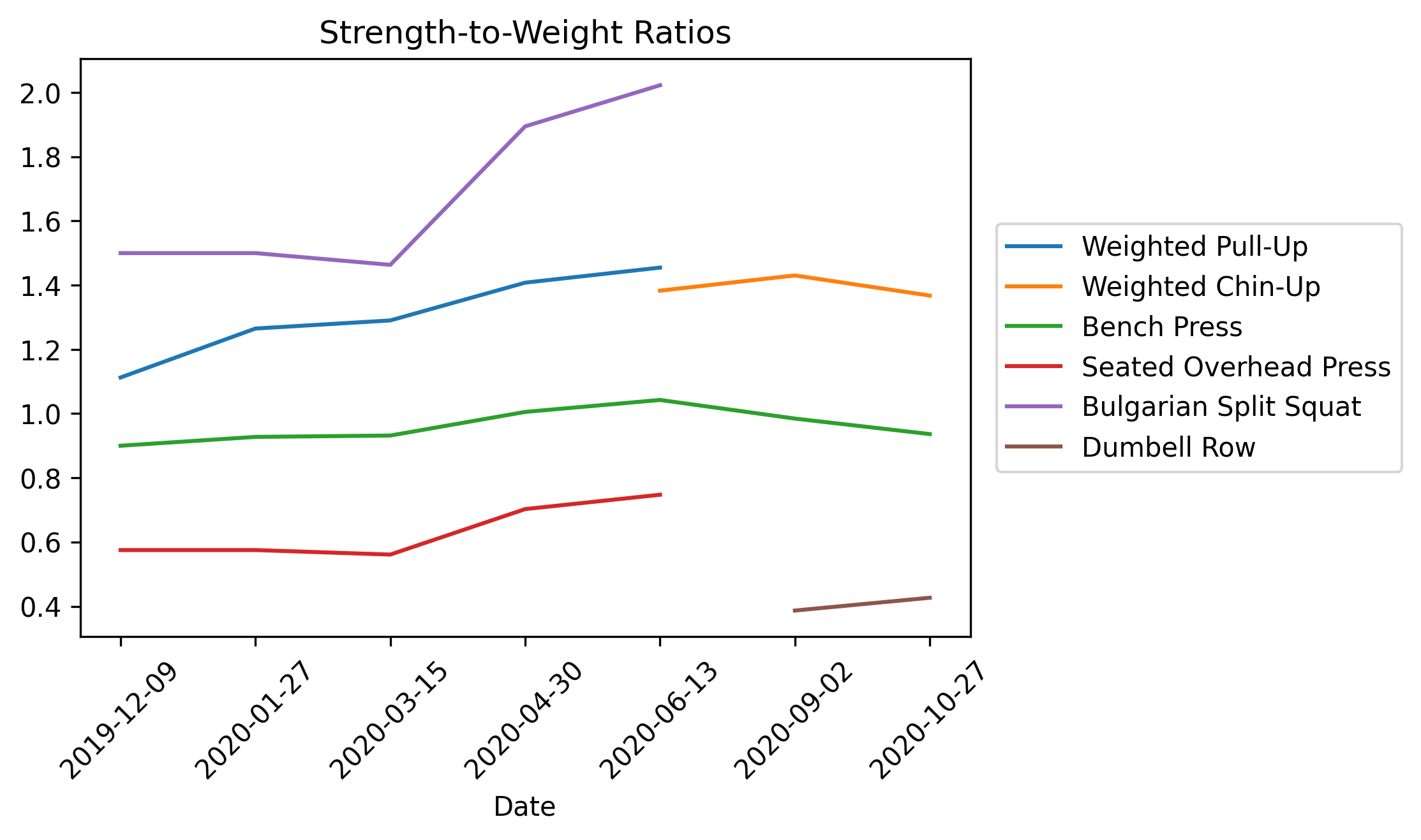

I wish I could say that left me with smooth and continuous progress, but it wouldn’t be true. We moved, had a kid, and I picked up a small host of minor but long-lasting injuries. Along the way, I ended up making some changes to my routine.

For lifting, I switched from the Tactical Barbell program (with its focus on 1RM testing) to a more “bodybuilding” style of lifting: 5-week phases with a lot more volume (sets of 10+), building gradually to a peak, followed by a recovery week. I started this new routine in mid-December 2020 and am currently halfway through Phase 19. At a nominal 5 weeks per phase, that means I’ve been about 60% efficient with my lifting compared to an ideal schedule.
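As a rough check of that figure, assume the update date means January 4, 2024 and take mid-December 2020 as December 15 (both are assumptions). That gives about 1,115 elapsed days, while 18.5 completed phases at 5 weeks each account for 92.5 weeks:

$$
\text{efficiency} \approx \frac{18.5 \times 5\ \text{weeks}}{1115\ \text{days} / 7} = \frac{92.5\ \text{weeks}}{\approx 159.3\ \text{weeks}} \approx 0.58
$$

which rounds to roughly 60%.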

I’ve also ended up with a better table tennis setup at home. I now have a table, a robot, and, most importantly, enough room to use them, so robot drills have become a regular part of my routine. I’ll write about my shot-accuracy tracking system in a later update.

These notes are a stream-of-consciousness response to reading through The Twelve-Factor App at the suggestion of a good friend of mine.

Introduction and Background

Nothing particularly special here. At such a high level of abstraction, everything sounds like a good and reasonable idea!

Section 1: Codebase

Nothing too controversial in here (I hope!). I like the emphasis on strictly delineating codebases between apps and on factoring shared code out into separate libraries under dependency management.

Section 2: Dependencies

I know some people headed to London soon, so for them and anyone else, here are my London recommendations. Do note that these all date from trips I took before the onset of COVID-19, so check current availability and opening hours for your specific visit.

I’m back with another round of course notes, this time from Andrew Ng’s Machine Learning course on Coursera, as well as the entire Deep Learning Specialization sequence.

My history with these courses and this material is a curious one. I became extremely familiar with much of the background mathematics, including calculus, during my physics degree years ago. Then, around 2011, I took the first run of Caltech’s Learning From Data online class, which was, as is usual for Caltech, a tour de force of even more math that drilled down to the core of some of the most fundamental concepts in machine learning. But it naturally predated the more recent focus on neural networks, so I made a mental bookmark to return to the subject and catch up on more recent developments once I had the opportunity.


I talk a lot about comparative advantage and finding ways to trade on it. But one thing I’ve never directly defended is the idea that strong comparative advantages can exist, and that you can improve your chances of developing them by studying more or less anything at all.

– tl;dr –

To communicate well, apply the techniques of error-correcting conversations, especially whenever someone says something that sounds dumb or otherwise doesn’t make sense:

- Make sure you’ve heard their words correctly: “Did you just say <the words you heard>?”

- Use your understanding to predict an implication beyond the immediate bounds of what they’ve said, and check whether their understanding agrees with your prediction: “Does that mean we would also expect X/Y/Z?”

- Rinse and repeat.

Management is about developing comparative advantages, both within yourself and among your teams. The goal for yourself is to minimize context-switching costs on a daily basis. The goal for your teams is to ensure that, no matter what surprise tomorrow brings, your team has a spread of comparative advantages that lets it tackle the work efficiently and effectively.

As an addendum, when hiring, forget the adage about “T-shaped people”. Instead, seek out and hire Koosh ball people: people with multiple, separate areas of deep skill. This maximizes the chance that your team members have truly distinct and applicable comparative advantages, no matter the challenges ahead.

Leaders have two jobs: to cultivate pervasive trust, and to delegate well.

There’s a lot said about culture, and most of it is BS. The only thing that matters in a startup’s culture is whether or not all members of the company share a deep trust with each other. The operative question is “Would they take a bullet for me?” When asked from anyone’s perspective, and directed at anyone else, the answer had better be “yes”. As a leader, judge every action, policy, incentive, and plan by whether it deepens or depletes trust.

Delegation is a two-way contract. It must have a clear vision and strategy defined from above, and tactical authority and true acceptance from below. The delegator specifies the goal and the delegatee specifies what they need to accomplish it. Vitally, the delegatee must truly be able to say no if they deem the task impossible given the resource constraints.